Improving Quality of Life for Locked-In Syndrome Patients

Reducing diagnosis time from 78 days to 0 and improving doctor-patient knowledge transfer using audiovisual communication

Locked-In Syndrome (LiS) is a condition in which a person is fully paralyzed except for vertical eye movements, while cognition remains intact. This means patients are fully aware but unable to communicate, move, or eat independently.

The challenge

For my master’s degree applied project, I was tasked with solving a problem of my choice using immersive design and human-centered principles.

Project specs:

Create a prototype that solves the identified problem

16 weeks of research

16 weeks of design

Outcomes & Deliverables

Toolkit for knowledge transfer, motivation & communication

A total of 9 solutions have been identified as potentially feasible and helpful for this population. These solutions have been analyzed, pros and cons have been delineated, and levels of solution confidence have been assigned to each one. I have decided to focus on the first 2 for my applied project.

List of solutions ordered by confidence and priority:

An orientation video to inform, motivate, and empower patients

A nurse call button via eye gaze

The research

1. Mapping passions, identifying a problem

When I began my Fall 2024 semester, I had an idea for a VR game. It was only of mild interest to me, so my professor suggested I select a topic I’m passionate about. To “find my problem”, I mapped out the topics I’m most interested in. These interests intersected at the following topics:

Communication

Helping people who cannot speak

AAC (Augmentative and Alternative Communication devices)

After identifying these, I investigated which populations I could help and arrived at the following:

autistic individuals

individuals with locked-in syndrome

The problem I thought I was solving? Mental health (anxiety and depression) for those with Locked-in Syndrome.

Luckily, I started a literature review to validate my assumption that mental health was the core problem…because I was wrong! It was only the tip of the iceberg. More on that later…

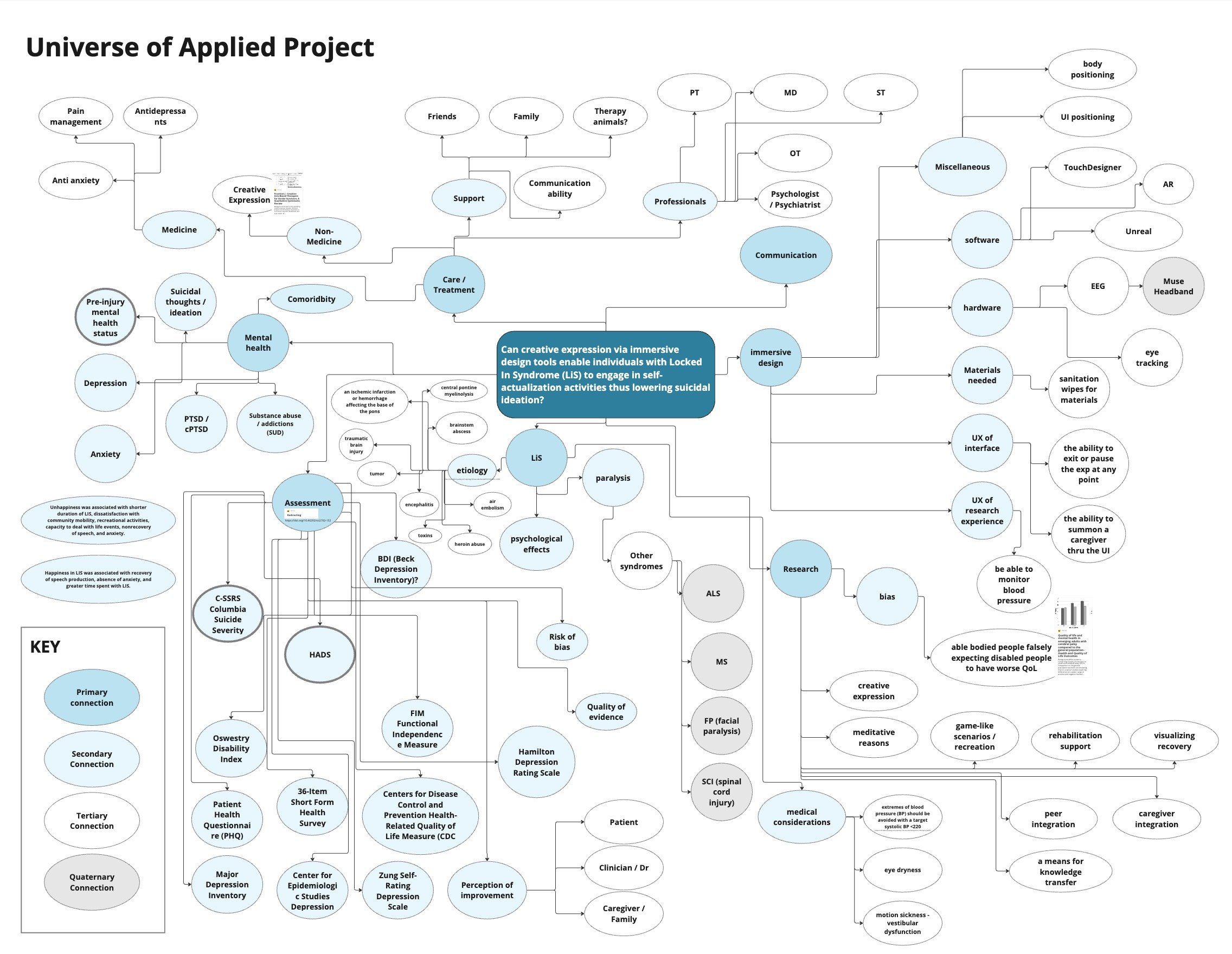

2. Literature review, understanding the universe of LiS

The literature review allowed me to investigate the universe of locked-in syndrome, explore its connections, understand the existing research, identify where I was knowledgeable and where I lacked knowledge, and identify gaps and opportunities.

3. Personas

The patient

The doctor

The support system / family

4. User journey

Mapping the user journey allowed me to visually understand the path of LiS from injury/incident to diagnosis to treatment, which helped me identify gaps in my knowledge. In doing this, I was able to understand the people, processes, and tools at each stage and solution accordingly.

This is when I discovered that decreased mental health was the result of 3 things:

lack of knowledge of the diagnosis (knowledge transfer)

lack of expectation setting for recovery (acceptance)

lack of a communication method (communication)

This discovery shaped and reshaped my solutioning.

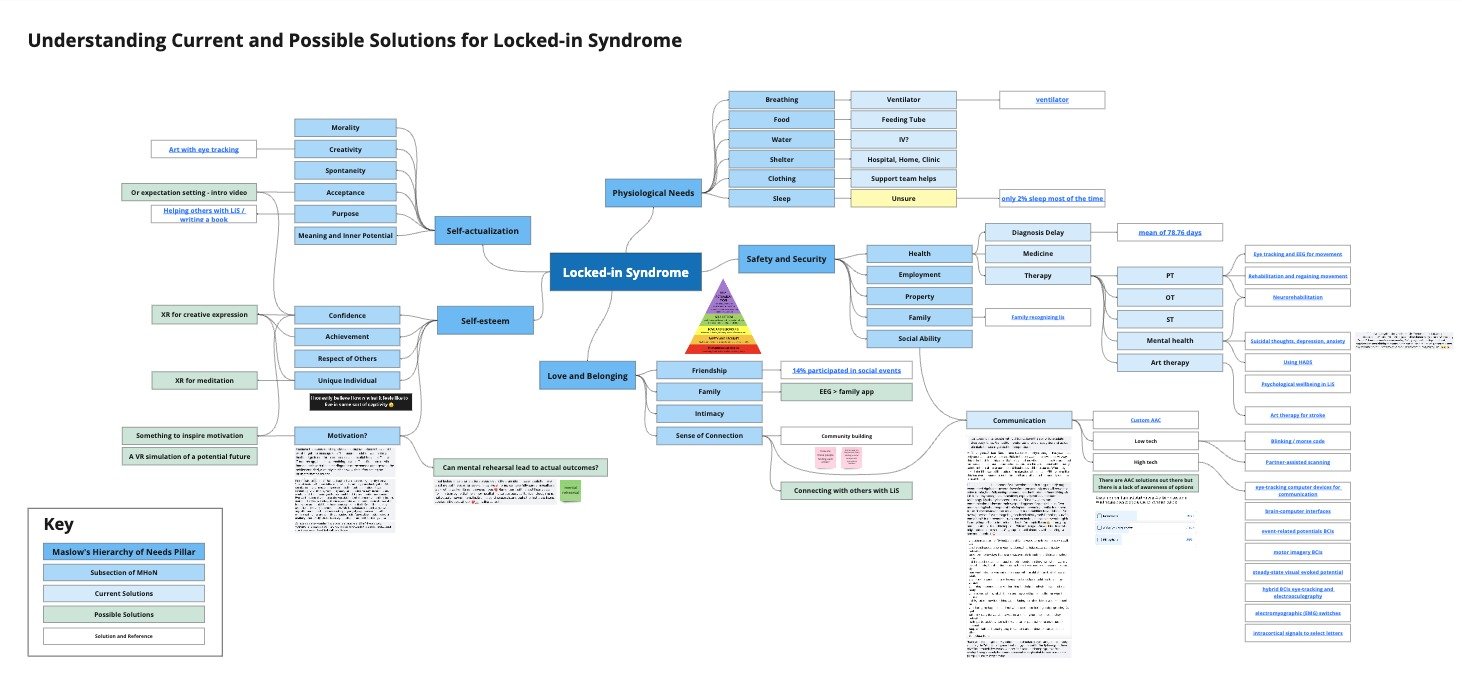

5. Map needs to current solutions & identify possible solutions

Using Maslow’s Hierarchy of Needs, I mapped out patients’ needs and the existing solutions, then began developing my own solutions.

6. Ideate & conceptualize

Then, I took to the whiteboard (highly caffeinated). Here is a little peek into my initial scribbles and ideas. This was the basis of the 9 solutions identified.

7. Plan and prioritize solutions

Nine solutions were identified and mapped to persona, pain point, and goal. Each solution was described and then sketched. Lastly, a solution confidence score was assigned to each. This score (1-10) was based on how confident I was that I was solving the right problem in the right way (10 being the highest confidence).

The solutions

And lastly, I developed the top 2 solutions as the deliverables for my master’s applied project. Details below.

Locked-in Syndrome Informational Video (Solution 1)

The what

This solution is called the “Locked-in Syndrome Informational Video” and is meant to:

inform patients of their diagnosis (empowerment)

assist with doctor-patient communication (knowledge transfer)

provide resources for how to navigate insurance and assistive devices

inspire and foster hope and motivation

The why

In the literature review, the following pain points were identified: effective doctor-patient communication, knowledge of diagnosis, expectation setting, and a need for connection with others for motivation and inspiration.

Therefore, it was hypothesized that a video (controlled by eye gaze to foster independence) would target these pain points and lead to better quality of life for patients recently diagnosed with LiS.

The how

I used Unreal Engine 5.4.4 to create this video because eye-tracking technology can be paired with it. The eye-tracking functionality is still pending, but the idea is that patients would control the video either with a Tobii eye-tracking device mounted on top of the computer or TV, or with lightweight AR glasses that have integrated eye tracking.

In-Hospital Eye-Gaze Nurse Call Button (Solution 2)

The what

This solution is called the “In-Hospital Eye-Gaze Nurse Call Button” and is meant to:

allow patients to independently request assistance using eye gaze

expedite diagnosis time

allow nurses to return to their typical workflow

The why

Currently, call buttons rely on motor movement: the patient needs to press a button to call the nurse. Assistive devices exist that allow patients to call using a switch or a breath-based switch, but these will not work for those who are completely paralyzed. One alternative is calling for assistance with eye gaze using AAC (Augmentative and Alternative Communication), though it can take weeks to months for a speech-language pathologist to prescribe a device. The benefits of this solution are as follows:

it is free

it is immediate, functioning, and open source

it is browser-based and only needs your device’s camera to operate

The how

I teamed up with ASU professor Dr. Tejaswi Gowda, used his JavaScript code (built on MediaPipe), and altered the frontend UI to match my solution’s needs. The following technology was used:

JavaScript

HTML

CSS

MediaPipe for eye detection

VS Code

GitHub
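
The dwell-to-select idea behind an eye-gaze call button can be sketched in a few lines of JavaScript. This is a minimal illustration, not the code used in the project: the function name `createDwellDetector`, the gaze-sample shape `{ x, y, t }`, the target region, and the 1-second dwell time are all assumptions. It also assumes that an upstream eye tracker (MediaPipe in this project) has already turned camera frames into gaze points; that step is not shown.

```javascript
// Hypothetical sketch: none of these names come from the project's code.
// A gaze sample is { x, y, t }: normalized screen coordinates (0..1)
// and a timestamp in milliseconds.

function createDwellDetector({ region, dwellMs = 1000, onTrigger }) {
  let enteredAt = null; // time the gaze entered the region, null when outside
  let fired = false;    // allows only one call per continuous look

  const inside = ({ x, y }) =>
    x >= region.x && x <= region.x + region.width &&
    y >= region.y && y <= region.y + region.height;

  // Feed one gaze sample; returns true only on the sample that triggers the call.
  return function update(sample) {
    if (!inside(sample)) {
      enteredAt = null; // looking away resets the timer
      fired = false;
      return false;
    }
    if (enteredAt === null) enteredAt = sample.t;
    if (!fired && sample.t - enteredAt >= dwellMs) {
      fired = true;
      onTrigger(sample.t);
      return true;
    }
    return false;
  };
}

// Usage: the call button occupies a band across the top of the screen
// (an assumed layout, chosen because vertical eye movement is preserved in LiS).
const calls = [];
const update = createDwellDetector({
  region: { x: 0, y: 0, width: 1, height: 0.2 },
  dwellMs: 1000,
  onTrigger: (t) => calls.push(t),
});

update({ x: 0.5, y: 0.1, t: 0 });    // gaze enters the button
update({ x: 0.5, y: 0.1, t: 600 });  // still looking, not long enough
update({ x: 0.5, y: 0.9, t: 700 });  // glance away: timer resets
update({ x: 0.5, y: 0.1, t: 800 });  // gaze re-enters
update({ x: 0.5, y: 0.1, t: 1900 }); // 1100 ms of continuous gaze: nurse is called
```

Requiring a continuous dwell, and resetting on any glance away, is one common way to avoid accidental activations from brief or involuntary eye movements. The right dwell time would need to be tuned with patients and clinicians.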

Reflections

This project challenged me in various ways. It challenged my technical skills and what I thought I was capable of. Before starting this project, I had little-to-no experience with Unreal Engine or coding. I’m proud that I persevered and allowed the problem-solution to drive the outcomes rather than my technical skills.

It also challenged me to be ok with rethinking, restarting, and making sure I am on the right path. I started this project thinking I was creating a language learning app in VR. Then, I learned about LiS and decided I wanted to solution for this (but did not have any solutions prior to the literature review). I proceeded with faith that if I was dedicated enough to learning and research, the right solutions would become clear.

I also had to address my own biases, have humility, and be ok with being wrong and pivoting. I originally thought I was solving for anxiety and depression. But the research informed me that there were more urgent needs that were closer to the core of the problem. I had to be ok with deprioritizing my initial concepts (because my ideas are not precious) and developing other solutions.

All in all, I am content with the outcome and hope to continue this route of solutioning, either professionally or as a “hobbyist” passion project.

Gratitude

This project would not exist without the encouragement, support, and guidance from my committee members:

Sven Ortel, Professor of Practice, ASU

Laura Cechanowicz, Assistant Professor, ASU

Dr. Tejaswi Gowda, Assistant Professor, ASU

I want to extend my immense gratitude to my committee for their guidance in my research and for taking the time to serve as committee members. Each of them has spent time with me thinking through research, design decisions, and technical avenues and solutions.

Specifically, I want to thank Sven for encouraging me to invest time in discovering a topic I am deeply passionate about and for consistently being available and present for analysis and direction over the past two semesters. I want to thank Laura for offering an invaluable perspective and being so adept at connecting me with individuals in the industry from whom I can glean further insights. And I want to thank Tejaswi for being so gracious with his brilliant technical skills and allowing me to utilize his eye gaze technology and alter it to fit this specific use case. It’s professors like these who make education a meaningful and joyful experience, and I am grateful for their willingness to be on my committee.

Additionally, I want to thank my peers, Julianna Piechowicz and JoAnn Lujan. Julianna provided advice and expertise pertaining to code and UI design and was such an invaluable thought partner. JoAnn was gracious to offer her time and expertise in audio and sound to help me record the voice overs. It’s incredibly humbling to know this support exists, and I hope to pay it forward.